Environment

- Ubuntu 18.04 64-bit

- Python 3.8

- PyTorch 1.8.2 + cu111

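Introduction

The previous post on YOLOv7 mentioned that, beyond object detection, YOLOv7 would also be applied to human pose estimation and instance segmentation, although at that time the authors had only released the pose estimation model. The good news is that the YOLOv7 authors have recently released an instance segmentation model as well. This post looks at how YOLOv7 performs on instance segmentation.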

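Hands-on

The instance segmentation code lives in the mask branch, which is still under active development, so we clone the latest code from that branch directly:

    git clone https://github.com/WongKinYiu/yolov7.git -b mask yolov7-mask
    cd yolov7-mask

The segmentation part depends on Facebook's detectron2, and detectron2 requires torch 1.8 or newer. Since I had been using 1.7.1 until now, a new environment is needed: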

    # Create a new virtual environment
    conda create -n pytorch1.8 python=3.8
    conda activate pytorch1.8

    # Install torch 1.8.2
    pip install torch==1.8.2 torchvision==0.9.2 torchaudio===0.8.2 --extra-index-url https://download.pytorch.org/whl/lts/1.8/cu111

    # Edit requirements.txt and comment out torch and torchvision before installing
    pip install -r requirements.txt

    # Install detectron2
    git clone https://github.com/facebookresearch/detectron2
    cd detectron2
    python setup.py install
    cd ..

Then download the instance segmentation model from https://github.com/WongKinYiu/yolov7/releases/download/v0.1/yolov7-mask.pt and place it in the source directory.
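Before moving on, it may be worth confirming that the new environment really satisfies the torch requirement mentioned above. The following is only a minimal sketch: the helper names `version_tuple`, `meets_minimum`, and `describe_environment` are my own, and the minimum of (1, 8) is taken from the requirement stated in this post rather than read from detectron2 itself.

```python
import re


def version_tuple(version):
    """Turn a version string such as '1.8.2+cu111' into (1, 8, 2)."""
    core = version.split("+")[0]  # drop the local build suffix, e.g. '+cu111'
    return tuple(int(part) for part in re.findall(r"\d+", core)[:3])


def meets_minimum(version, minimum=(1, 8)):
    """Compare numerically, so that '1.10.0' counts as newer than '1.8'."""
    return version_tuple(version) >= tuple(minimum)


def describe_environment():
    """Collect a few human-readable lines about torch and detectron2."""
    lines = []
    try:
        import torch
        lines.append("torch %s (>= 1.8: %s)" % (torch.__version__, meets_minimum(torch.__version__)))
        lines.append("CUDA available: %s" % torch.cuda.is_available())
    except ImportError:
        lines.append("torch is not installed in this environment")
    try:
        import detectron2
        lines.append("detectron2 %s" % detectron2.__version__)
    except ImportError:
        lines.append("detectron2 is not importable")
    return lines


if __name__ == "__main__":
    for line in describe_environment():
        print(line)
```

Run it inside the activated `pytorch1.8` environment; if torch or detectron2 is missing, the script reports that instead of failing.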

The segmentation example code is in tools/instance.ipynb and can be run directly in Jupyter Notebook.

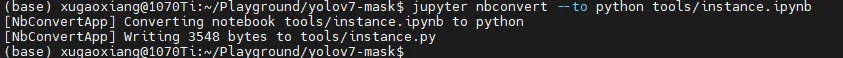

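To convert the ipynb file into a Python file, run:

    jupyter nbconvert --to python tools/instance.ipynb

Copy the generated tools/instance.py to the root of the source tree, then change the display code at the end of the file so that it saves the result image instead: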

    import torch
    import cv2
    import yaml
    from torchvision import transforms
    import numpy as np

    from utils.datasets import letterbox
    from utils.general import non_max_suppression_mask_conf

    from detectron2.modeling.poolers import ROIPooler
    from detectron2.structures import Boxes
    from detectron2.utils.memory import retry_if_cuda_oom
    from detectron2.layers import paste_masks_in_image

    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

    # Load the mask hyperparameters (mask_resolution is used below)
    with open('data/hyp.scratch.mask.yaml') as f:
        hyp = yaml.load(f, Loader=yaml.FullLoader)

    # Load the checkpoint and run the model in half precision, eval mode
    weights = torch.load('yolov7-mask.pt')
    model = weights['model']
    model = model.half().to(device)
    _ = model.eval()

    image = cv2.imread('inference/images/horses.jpg')  # 504x378 image
    # cv2 loads BGR; convert to RGB because the RGB2BGR conversion below expects RGB
    image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
    image = letterbox(image, 640, stride=64, auto=True)[0]
    image_ = image.copy()
    image = transforms.ToTensor()(image)
    image = torch.tensor(np.array([image.numpy()]))
    image = image.to(device)
    image = image.half()

    with torch.no_grad():
        output = model(image)

        inf_out, train_out, attn, mask_iou, bases, sem_output = output['test'], output['bbox_and_cls'], output['attn'], output['mask_iou'], output['bases'], output['sem']
        bases = torch.cat([bases, sem_output], dim=1)
        nb, _, height, width = image.shape
        names = model.names
        pooler_scale = model.pooler_scale
        pooler = ROIPooler(output_size=hyp['mask_resolution'], scales=(pooler_scale,), sampling_ratio=1, pooler_type='ROIAlignV2', canonical_level=2)

        # NMS over boxes and their mask coefficients
        output, output_mask, output_mask_score, output_ac, output_ab = non_max_suppression_mask_conf(inf_out, attn, bases, pooler, hyp, conf_thres=0.25, iou_thres=0.65, merge=False, mask_iou=None)

        pred, pred_masks = output[0], output_mask[0]
        base = bases[0]
        bboxes = Boxes(pred[:, :4])

        # Paste the low-resolution masks back into full-image coordinates
        original_pred_masks = pred_masks.view(-1, hyp['mask_resolution'], hyp['mask_resolution'])
        pred_masks = retry_if_cuda_oom(paste_masks_in_image)(original_pred_masks, bboxes, (height, width), threshold=0.5)

    pred_masks_np = pred_masks.detach().cpu().numpy()
    pred_cls = pred[:, 5].detach().cpu().numpy()
    pred_conf = pred[:, 4].detach().cpu().numpy()

    # Convert the input tensor back to a BGR uint8 image for drawing
    nimg = image[0].permute(1, 2, 0) * 255
    nimg = nimg.cpu().numpy().astype(np.uint8)
    nimg = cv2.cvtColor(nimg, cv2.COLOR_RGB2BGR)
    nbboxes = bboxes.tensor.detach().cpu().numpy().astype(int)  # np.int was removed in recent NumPy
    pnimg = nimg.copy()

    for one_mask, bbox, cls, conf in zip(pred_masks_np, nbboxes, pred_cls, pred_conf):
        if conf < 0.25:
            continue
        color = [np.random.randint(255), np.random.randint(255), np.random.randint(255)]

        # Blend the mask color into the image at 50% opacity, then draw the box
        pnimg[one_mask] = pnimg[one_mask] * 0.5 + np.array(color, dtype=np.uint8) * 0.5
        pnimg = cv2.rectangle(pnimg, (bbox[0], bbox[1]), (bbox[2], bbox[3]), color, 2)

        # Optional: draw the class label and confidence
        #label = '%s %.3f' % (names[int(cls)], conf)
        #t_size = cv2.getTextSize(label, 0, fontScale=0.5, thickness=1)[0]
        #c2 = bbox[0] + t_size[0], bbox[1] - t_size[1] - 3
        #pnimg = cv2.rectangle(pnimg, (bbox[0], bbox[1]), c2, color, -1, cv2.LINE_AA)  # filled
        #pnimg = cv2.putText(pnimg, label, (bbox[0], bbox[1] - 2), 0, 0.5, [255, 255, 255], thickness=1, lineType=cv2.LINE_AA)

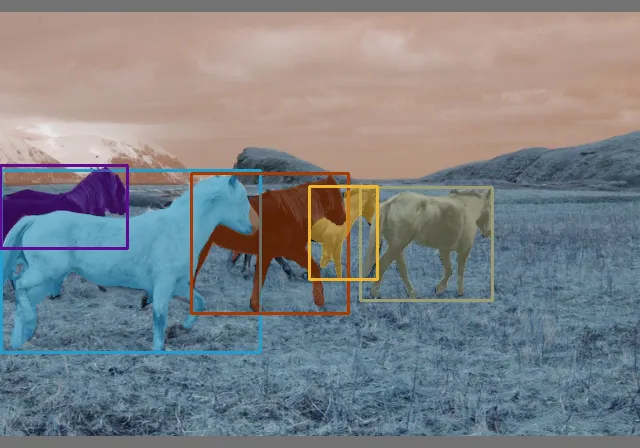

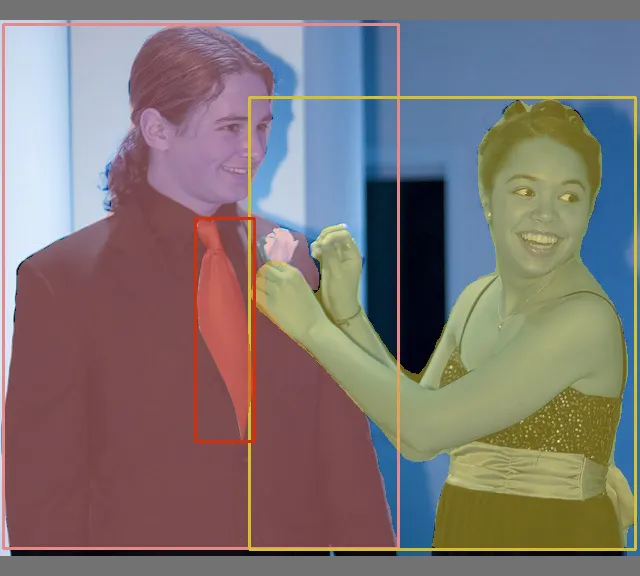

cv2.imwrite("instance_result.jpg", pnimg)找了2张测试图片,看看效果
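The script resizes its input with `letterbox(image, 640, stride=64, auto=True)`, so the tensor fed to the model is usually not the same size as the source image. The sketch below reproduces only the shape arithmetic, as I understand the usual YOLO letterbox logic (scale to fit, then pad each side up to the next multiple of the stride); the function name `letterbox_shape` is hypothetical and it does not touch any pixels. It also assumes the "504x378" comment means 504 wide by 378 high.

```python
def letterbox_shape(height, width, new_size=640, stride=64, auto=True, scaleup=True):
    """Return (padded_height, padded_width) for a letterboxed image.

    This mirrors only the size computation; the real function also
    resizes the image and adds the gray border.
    """
    # Scale so that the longer side fits into new_size
    ratio = min(new_size / height, new_size / width)
    if not scaleup:
        ratio = min(ratio, 1.0)  # only shrink, never enlarge

    resized_h = int(round(height * ratio))
    resized_w = int(round(width * ratio))

    # Padding needed to reach new_size on each axis
    pad_h = new_size - resized_h
    pad_w = new_size - resized_w
    if auto:
        # Keep only the minimum padding that makes each side a multiple of stride
        pad_h %= stride
        pad_w %= stride

    return resized_h + pad_h, resized_w + pad_w


if __name__ == "__main__":
    print(letterbox_shape(378, 504))                   # the horses.jpg case
    print(letterbox_shape(1080, 1920, new_size=1920))  # a 1080p video frame
```

Under these assumptions the 504x378 image becomes 640 wide by 512 high, and a 1920x1080 frame letterboxed to its own width becomes 1920x1088, which is why the output size can differ slightly from the source resolution.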

Likewise, here is a version of the code for running on a video file or a camera:

    import torch
    import cv2
    import yaml
    from torchvision import transforms
    import numpy as np

    from utils.datasets import letterbox
    from utils.general import non_max_suppression_mask_conf

    from detectron2.modeling.poolers import ROIPooler
    from detectron2.structures import Boxes
    from detectron2.utils.memory import retry_if_cuda_oom
    from detectron2.layers import paste_masks_in_image

    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

    with open('data/hyp.scratch.mask.yaml') as f:
        hyp = yaml.load(f, Loader=yaml.FullLoader)

    weights = torch.load('yolov7-mask.pt')
    model = weights['model']
    model = model.half().to(device)
    _ = model.eval()

    # Pass a video file path, or a device index such as 0 for a camera
    cap = cv2.VideoCapture('vehicle_test.mp4')
    if not cap.isOpened():
        print('open failed.')
        exit(-1)

    # Resolution of the source video
    frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

    # Letterbox the first frame to get the output size (this consumes one frame)
    vid_write_image = letterbox(cap.read()[1], frame_width, stride=64, auto=True)[0]
    resize_height, resize_width = vid_write_image.shape[:2]

    # Writer for the result video
    out = cv2.VideoWriter("result_instance.mp4",
                          cv2.VideoWriter_fourcc(*'mp4v'), 30,
                          (resize_width, resize_height))

    while cap.isOpened():
        flag, image = cap.read()
        if not flag:
            break

        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
        image = letterbox(image, frame_width, stride=64, auto=True)[0]
        image_ = image.copy()
        image = transforms.ToTensor()(image)
        image = torch.tensor(np.array([image.numpy()]))
        image = image.to(device)
        image = image.half()

        with torch.no_grad():
            output = model(image)

            inf_out, train_out, attn, mask_iou, bases, sem_output = output['test'], output['bbox_and_cls'], output['attn'], output['mask_iou'], output['bases'], output['sem']
            bases = torch.cat([bases, sem_output], dim=1)
            nb, _, height, width = image.shape
            names = model.names
            pooler_scale = model.pooler_scale
            pooler = ROIPooler(output_size=hyp['mask_resolution'], scales=(pooler_scale,), sampling_ratio=1, pooler_type='ROIAlignV2', canonical_level=2)

            output, output_mask, output_mask_score, output_ac, output_ab = non_max_suppression_mask_conf(inf_out, attn, bases, pooler, hyp, conf_thres=0.25, iou_thres=0.65, merge=False, mask_iou=None)

            pred, pred_masks = output[0], output_mask[0]
            base = bases[0]
            bboxes = Boxes(pred[:, :4])

            original_pred_masks = pred_masks.view(-1, hyp['mask_resolution'], hyp['mask_resolution'])
            pred_masks = retry_if_cuda_oom(paste_masks_in_image)(original_pred_masks, bboxes, (height, width), threshold=0.5)

        pred_masks_np = pred_masks.detach().cpu().numpy()
        pred_cls = pred[:, 5].detach().cpu().numpy()
        pred_conf = pred[:, 4].detach().cpu().numpy()

        # Convert the input tensor back to a BGR uint8 frame for drawing
        nimg = image[0].permute(1, 2, 0) * 255
        nimg = nimg.cpu().numpy().astype(np.uint8)
        nimg = cv2.cvtColor(nimg, cv2.COLOR_RGB2BGR)
        nbboxes = bboxes.tensor.detach().cpu().numpy().astype(int)  # np.int was removed in recent NumPy
        pnimg = nimg.copy()

        for one_mask, bbox, cls, conf in zip(pred_masks_np, nbboxes, pred_cls, pred_conf):
            if conf < 0.25:
                continue
            color = [np.random.randint(255), np.random.randint(255), np.random.randint(255)]
            pnimg[one_mask] = pnimg[one_mask] * 0.5 + np.array(color, dtype=np.uint8) * 0.5
            pnimg = cv2.rectangle(pnimg, (bbox[0], bbox[1]), (bbox[2], bbox[3]), color, 2)

        cv2.imshow('YOLOv7 mask', pnimg)
        out.write(pnimg)

        # Press q to stop early
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    cap.release()
    out.release()
    cv2.destroyAllWindows()
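Both scripts color each instance with the line `pnimg[one_mask] = pnimg[one_mask] * 0.5 + color * 0.5`, which relies on NumPy boolean-mask indexing. Here is a small standalone sketch of that blending step on a synthetic 4x4 image; the helper name `overlay_mask` and the `alpha` parameter are my own additions for illustration, not part of the YOLOv7 code.

```python
import numpy as np


def overlay_mask(image, mask, color, alpha=0.5):
    """Blend `color` into `image` wherever the boolean `mask` is True.

    image: (H, W, 3) uint8 array, mask: (H, W) bool array.
    Returns a new array; the input image is left unchanged.
    """
    blended = image.copy()
    # image[mask] selects an (N, 3) array of the masked pixels
    mixed = image[mask] * (1.0 - alpha) + np.array(color, dtype=np.float64) * alpha
    blended[mask] = mixed.astype(np.uint8)
    return blended


if __name__ == "__main__":
    image = np.full((4, 4, 3), 100, dtype=np.uint8)  # uniform gray image
    mask = np.zeros((4, 4), dtype=bool)
    mask[1:3, 1:3] = True                            # a 2x2 "instance" in the center
    result = overlay_mask(image, mask, color=(200, 0, 50))
    print(result[1, 1], result[0, 0])
```

Pixels outside the mask keep their original values, while pixels inside it end up halfway between the original value and the chosen color.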