软硬兼环境

- windows 10 64bit

- nivdia gtx 1066

- opencv 4.4.0

简介

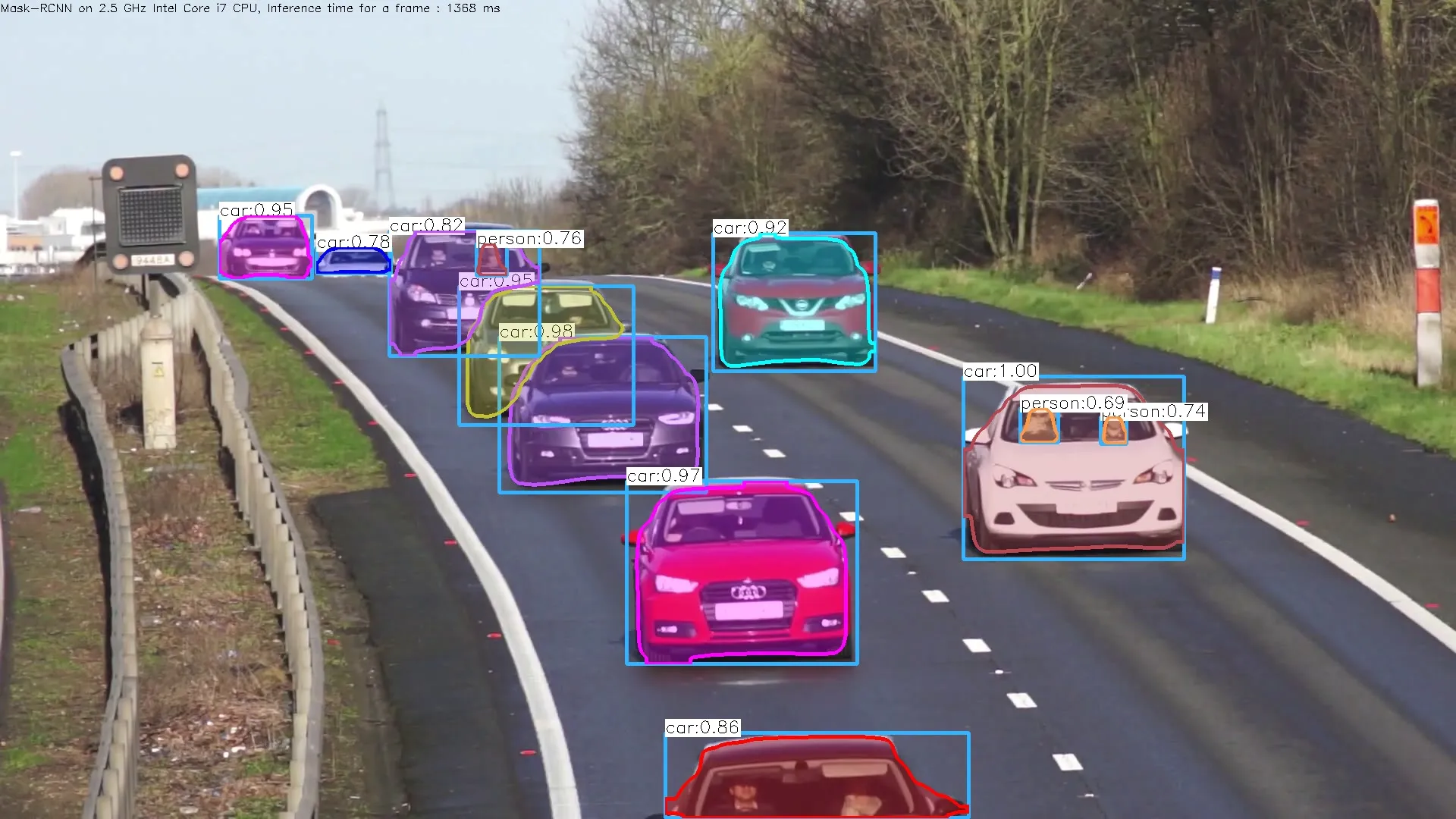

Mask R-CNN 是在原有的 R-CNN 基础上实现了区域 ROI 的像素级别分割。tensorflow 框架有个扩展模块叫做 models,里面包含了很多预训练的网络模型,提供给开发者直接使用或者迁移学习使用,tensorflow object detection model zone 中现在有四个使用不同骨干网(InceptionV2,ResNet50,ResNet101和Inception-ResnetV2)的 Mask R-CNN 模型,这些模型都是在 MSCOCO 数据集上训练出来的,其中使用 Inception 的模型是这四个中最快的,本文也是使用该模型。

模型下载

首先需要下载 Mask R-CNN 网络模型,地址是: http://download.tensorflow.org/models/object_detection/mask_rcnn_inception_v2_coco_2018_01_28.tar.gz, 下载后解压出里面的模型文件 frozen_inference_graph.pb

代码示例

import cv2 as cv

import argparse

import numpy as np

import os.path

import sys

import random

# 设置目标检测的置信度阈值和Mask二值化分割阈值

confThreshold = 0.5

maskThreshold = 0.3

parser = argparse.ArgumentParser(description='Use this script to run Mask-RCNN object detection and segmentation')

parser.add_argument('--image', help='Path to image file')

parser.add_argument('--video', help='Path to video file.')

args = parser.parse_args()

# 画bounding box

def drawBox(frame, classId, conf, left, top, right, bottom, classMask):

# Draw a bounding box.

cv.rectangle(frame, (left, top), (right, bottom), (255, 178, 50), 3)

label = '%.2f' % conf

if classes:

assert (classId < len(classes))

label = '%s:%s' % (classes[classId], label)

# Display the label at the top of the bounding box

labelSize, baseLine = cv.getTextSize(label, cv.FONT_HERSHEY_SIMPLEX, 0.5, 1)

top = max(top, labelSize[1])

cv.rectangle(frame, (left, top - round(1.5 * labelSize[1])), (left + round(1.5 * labelSize[0]), top + baseLine),

(255, 255, 255), cv.FILLED)

cv.putText(frame, label, (left, top), cv.FONT_HERSHEY_SIMPLEX, 0.75, (0, 0, 0), 1)

# Resize the mask, threshold, color and apply it on the image

classMask = cv.resize(classMask, (right - left + 1, bottom - top + 1))

mask = (classMask > maskThreshold)

roi = frame[top:bottom + 1, left:right + 1][mask]

# color = colors[classId%len(colors)]

# Comment the above line and uncomment the two lines below to generate different instance colors

colorIndex = random.randint(0, len(colors) - 1)

color = colors[colorIndex]

frame[top:bottom + 1, left:right + 1][mask] = ([0.3 * color[0], 0.3 * color[1], 0.3 * color[2]] + 0.7 * roi).astype(

np.uint8)

# Draw the contours on the image

mask = mask.astype(np.uint8)

contours, hierarchy = cv.findContours(mask, cv.RETR_TREE, cv.CHAIN_APPROX_SIMPLE)

cv.drawContours(frame[top:bottom + 1, left:right + 1], contours, -1, color, 3, cv.LINE_8, hierarchy, 100)

# For each frame, extract the bounding box and mask for each detected object

def postprocess(boxes, masks):

# Output size of masks is NxCxHxW where

# N - number of detected boxes

# C - number of classes (excluding background)

# HxW - segmentation shape

numClasses = masks.shape[1]

numDetections = boxes.shape[2]

frameH = frame.shape[0]

frameW = frame.shape[1]

for i in range(numDetections):

box = boxes[0, 0, i]

mask = masks[i]

score = box[2]

if score > confThreshold:

classId = int(box[1])

# Extract the bounding box

left = int(frameW * box[3])

top = int(frameH * box[4])

right = int(frameW * box[5])

bottom = int(frameH * box[6])

left = max(0, min(left, frameW - 1))

top = max(0, min(top, frameH - 1))

right = max(0, min(right, frameW - 1))

bottom = max(0, min(bottom, frameH - 1))

# Extract the mask for the object

classMask = mask[classId]

# Draw bounding box, colorize and show the mask on the image

drawBox(frame, classId, score, left, top, right, bottom, classMask)

# mscoco_labels.names包含MSCOCO所有标注对象的类名称,共80个类别

classesFile = "mscoco_labels.names"

classes = None

with open(classesFile, 'rt') as f:

classes = f.read().rstrip('\n').split('\n')

# 定义如何加载模型权重

textGraph = "./mask_rcnn_inception_v2_coco_2018_01_28.pbtxt"

# 模型权重

modelWeights = "./frozen_inference_graph.pb"

# 使用dnn模块加载模型

net = cv.dnn.readNetFromTensorflow(modelWeights, textGraph)

# 可以使用GPU或者CPU进行推断

net.setPreferableBackend(cv.dnn.DNN_BACKEND_OPENCV)

net.setPreferableTarget(cv.dnn.DNN_TARGET_CPU)

# colors.txt是在图像上标出实例时,所属类显示的颜色值

colorsFile = "colors.txt";

with open(colorsFile, 'rt') as f:

colorsStr = f.read().rstrip('\n').split('\n')

colors = [] # [0,0,0]

for i in range(len(colorsStr)):

rgb = colorsStr[i].split(' ')

color = np.array([float(rgb[0]), float(rgb[1]), float(rgb[2])])

colors.append(color)

winName = 'Mask-RCNN Object detection and Segmentation in OpenCV'

cv.namedWindow(winName, cv.WINDOW_NORMAL)

# 处理后的结果保存到视频中

outputFile = "mask_rcnn_out.avi"

if (args.image):

# Open the image file

if not os.path.isfile(args.image):

print("Input image file ", args.image, " doesn't exist")

sys.exit(1)

cap = cv.VideoCapture(args.image)

outputFile = args.image[:-4] + '_mask_rcnn_out.jpg'

elif (args.video):

# Open the video file

if not os.path.isfile(args.video):

print("Input video file ", args.video, " doesn't exist")

sys.exit(1)

cap = cv.VideoCapture(args.video)

outputFile = args.video[:-4] + '_mask_rcnn_out.avi'

else:

# Webcam input

cap = cv.VideoCapture(0)

# Get the video writer initialized to save the output video

if (not args.image):

vid_writer = cv.VideoWriter(outputFile, cv.VideoWriter_fourcc('M', 'J', 'P', 'G'), 28,

(round(cap.get(cv.CAP_PROP_FRAME_WIDTH)), round(cap.get(cv.CAP_PROP_FRAME_HEIGHT))))

while cv.waitKey(1) < 0:

# 对每一帧数据进行处理

hasFrame, frame = cap.read()

# Stop the program if reached end of video

if not hasFrame:

print("Done processing !!!")

print("Output file is stored as ", outputFile)

cv.waitKey(3000)

break

# Create a 4D blob from a frame.

blob = cv.dnn.blobFromImage(frame, swapRB=True, crop=False)

# Set the input to the network

net.setInput(blob)

# 得到目标bounding box和mask

boxes, masks = net.forward(['detection_out_final', 'detection_masks'])

# Extract the bounding box and mask for each of the detected objects

postprocess(boxes, masks)

# Put efficiency information.

t, _ = net.getPerfProfile()

label = 'Inference time for a frame : %0.0f ms' % abs(

t * 1000.0 / cv.getTickFrequency())

cv.putText(frame, label, (0, 15), cv.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 0))

# Write the frame with the detection boxes

if (args.image):

cv.imwrite(outputFile, frame.astype(np.uint8));

else:

vid_writer.write(frame.astype(np.uint8))

cv.imshow(winName, frame)上述代码同时支持图片和视频

python demo.py --video test.mp4